- Research Article

- Open access

Multiaccess Channels with State Known to Some Encoders and Independent Messages

EURASIP Journal on Wireless Communications and Networking volume 2008, Article number: 450680 (2008)

Abstract

We consider a state-dependent multiaccess channel (MAC) with state noncausally known to some encoders. For simplicity of exposition, we focus on a two-encoder model in which one of the encoders has noncausal access to the channel state. The results can in principle be extended to any number of encoders with a subset of them being informed. We derive an inner bound for the capacity region in the general discrete memoryless case and specialize it to a binary noiseless case. In the binary noiseless case, we compare the inner bounds with trivial outer bounds obtained by providing the channel state to the decoder. In the case of maximum entropy channel state, we obtain the capacity region for the binary noiseless MAC with one informed encoder. For a Gaussian state-dependent MAC with one encoder being informed of the channel state, we present an inner bound by applying a slightly generalized dirty paper coding (GDPC) at the informed encoder, and a trivial outer bound by also providing the channel state to the decoder. In particular, if the channel input is negatively correlated with the channel state in the random coding distribution, then GDPC can be interpreted as partial state cancellation followed by standard dirty paper coding. The uninformed encoders benefit from the state cancellation in terms of achievable rates; however, it appears that GDPC cannot completely eliminate the effect of the channel state on the achievable rate region, in contrast to the case of all encoders being informed. In the case of infinite state variance, we provide an inner bound and a nontrivial outer bound that is better than the trivial outer bound.

1. Introduction

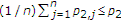

We consider a state-dependent multiaccess channel (MAC) with noiseless channel state noncausally known to only some, but not all, encoders. The simplest example of a communication system under investigation is shown in Figure 1, in which two encoders communicate to a single decoder through a state-dependent MAC $p(y|x_1,x_2,s)$ controlled by the channel state $S$. We assume that one of the encoders has noncausal access to the noiseless channel state. The results can in principle be extended to any number of encoders with a subset of them being informed of the noiseless channel state. The informed encoder and the uninformed encoder want to send messages $W_1$ and $W_2$, respectively, to the decoder in $n$ channel uses. The informed encoder, provided with both $W_1$ and the channel state $S^n$, generates the codeword $X_1^n$. The uninformed encoder, provided only with $W_2$, generates the codeword $X_2^n$. The decoder, upon receiving the channel output $Y^n$, estimates both messages $W_1$ and $W_2$ from $Y^n$. In this paper, our goal is to study the capacity region of this model.

1.1. Motivation

State-dependent channel models with state available at the encoder can be used to model information embedding (IE) [1–4]. IE is a recent area of digital media research with many applications, including passive and active copyright protection (digital watermarking); embedding important control, descriptive, or reference information into a given signal; and covert communications [5]. IE enables encoding a message into a host signal (digital image, audio, or video) such that it is perceptually and statistically undetectable. Given the various applications and advantages of IE, it is important to study the fundamental performance limits of these schemes. The information theory community has been studying the performance limits of such models, in which random parameters capture fading in a wireless environment, interference from other users [6], or the host sequence in IE and data hiding applications [1–4, 7].

The state-dependent models with channel state available at the encoders can also be used to model communication systems with cognitive radios. Because of the growing demand for bandwidth in wireless systems, some secondary users with cognitive capabilities are introduced into an existing primary communication system to use the frequency spectrum more efficiently [8]. These cognitive devices are assumed to be capable of obtaining knowledge about the primary communication that takes place in the channel and of adapting their coding schemes to remove the effect of the interference caused by the primary communication system, thereby increasing spectral efficiency. The state in such models can be viewed as the signal of the primary communication that takes place in the same channel, and the informed encoders can be viewed as cognitive users. The model considered in this paper can be viewed as a secondary multiaccess communication system with some cognitive and some noncognitive users introduced into the existing primary communication system. The cognitive users are capable of noncausally obtaining the channel state, that is, the signal of the primary communication system. In this paper, we are interested in studying the achievable rates of the secondary multiaccess communication system with some cognitive users. Joint design of the primary and the secondary networks is studied in [9, 10].

1.2. Background

The study of state-dependent models or channels with random parameters, primarily for single-user channels, was initiated by Shannon himself. Shannon studies the single-user discrete memoryless (DM) channels $p(y|x,s)$ with causal channel state at the encoder [11]. Here, $X$, $Y$, and $S$ are the channel input, output, and state, respectively. Salehi studies the capacity of these models when different noisy observations of the channel state are causally known to the encoder and the decoder [12]. Caire and Shamai extend the results of [12] to channels with memory [13].

Single-user DM state-dependent channels with memoryless state noncausally known to the encoder are studied in [14, 15] in the context of computer memories with defects. Gel'fand and Pinsker derive the capacity of these models, which is given by [16]

$$
C = \max_{p(u|s),\; x = f(u,s)} \big[ I(U;Y) - I(U;S) \big],
\tag{1}
$$

where $U$ is an auxiliary random variable, and $X$ is a deterministic function of $(U, S)$. Single-user DM channels with two state components, one component noncausally known to the encoder and another component known to the decoder, are studied in [17].
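Formula (1) can be evaluated directly once $p(u|s)$ and $x = f(u,s)$ are fixed. The Python sketch below assumes binary alphabets and, purely for illustration, the noiseless channel $Y = X \oplus S$ with $X$ independent of $S$ and the auxiliary $U = X \oplus S$. Since $X = U \oplus S$, the input is a deterministic function of $(U,S)$, as (1) requires. The helper names (`gp_rate`, `mutual_information`, `h`) are hypothetical.

```python
from itertools import product
from math import log2


def h(q):
    """Binary entropy function in bits."""
    return 0.0 if q in (0.0, 1.0) else -q * log2(q) - (1 - q) * log2(1 - q)


def mutual_information(joint, a_idx, b_idx):
    """I(A;B) in bits for a pmf {outcome_tuple: prob}; a_idx, b_idx select coordinates."""
    pa, pb, pab = {}, {}, {}
    for outcome, prob in joint.items():
        a = tuple(outcome[i] for i in a_idx)
        b = tuple(outcome[i] for i in b_idx)
        pa[a] = pa.get(a, 0.0) + prob
        pb[b] = pb.get(b, 0.0) + prob
        pab[a, b] = pab.get((a, b), 0.0) + prob
    return sum(pr * log2(pr / (pa[a] * pb[b])) for (a, b), pr in pab.items() if pr > 0)


def gp_rate(p_s, p_x_given_s, u_of, channel):
    """I(U;Y) - I(U;S) for binary S, X, Y and a deterministic auxiliary U = u_of(x, s)."""
    joint = {}
    for s, x, y in product((0, 1), repeat=3):
        prob = p_s[s] * p_x_given_s[s][x] * channel(y, x, s)
        if prob > 0:
            key = (s, u_of(x, s), y)  # coordinates: 0 -> S, 1 -> U, 2 -> Y
            joint[key] = joint.get(key, 0.0) + prob
    return mutual_information(joint, (1,), (2,)) - mutual_information(joint, (1,), (0,))


def xor_channel(y, x, s):
    """Noiseless binary channel Y = X xor S (transition probability)."""
    return 1.0 if y == x ^ s else 0.0


q, p = 0.11, 0.3  # illustrative input weight and state bias
rate = gp_rate({0: 1 - p, 1: p},
               {s: {0: 1 - q, 1: q} for s in (0, 1)},  # X independent of S
               lambda x, s: x ^ s,                      # U = X xor S
               xor_channel)
print(f"GP rate = {rate:.4f} bits, h(q) = {h(q):.4f} bits")
```

With this choice the rate equals $h(q)$ for every state bias $p$. This is the same rate as for the state-free channel $Y = X$ under the same input weight constraint.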

Costa studies the memoryless additive white Gaussian state-dependent channel of the form $Y^n = X^n + S^n + Z^n$. Here, $X^n$ is the channel input with power constraint $\frac{1}{n}\sum_{i=1}^{n} X_i^2 \le P$. The state $S^n$ is a memoryless vector whose elements are noncausally known to the encoder and are zero-mean Gaussian random variables with variance $Q$. The noise $Z^n$ is a memoryless additive vector whose elements are zero-mean Gaussian random variables with variance $N$, independent of the channel input and the state. The capacity of this model is given by [18]

$$
C = \frac{1}{2}\log\left(1 + \frac{P}{N}\right).
\tag{2}
$$

In terms of capacity, the result (2) indicates that noncausal state at the encoder is equivalent to state at the decoder or to no state in the channel. The so-called dirty paper coding (DPC) scheme used to achieve capacity (2) suggests that allocating power for explicit state cancellation is not optimal. That is, the channel input $X$ is uncorrelated with the channel state $S$ in the random coding distribution [18].
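As a quick numerical reading of (2), the Python sketch below evaluates Costa's capacity for several state variances $Q$. As an assumed baseline, it also evaluates the rate of a Gaussian codebook that simply treats $S + Z$ as noise. The function names are hypothetical, and logarithms are base 2.

```python
from math import log2


def costa_capacity(P, N):
    """Capacity (2) in bits per channel use; the state variance Q does not enter."""
    return 0.5 * log2(1 + P / N)


def state_as_noise_rate(P, Q, N):
    """Baseline rate of a Gaussian codebook that treats S + Z as noise of variance Q + N."""
    return 0.5 * log2(1 + P / (Q + N))


P, N = 15.0, 1.0
for Q in (0.0, 4.0, 100.0):
    print(f"Q = {Q:5.1f}: DPC {costa_capacity(P, N):.3f}, "
          f"state as noise {state_as_noise_rate(P, Q, N):.3f}")
```

The DPC column stays constant as $Q$ grows, while the baseline decays. This gap is what noncausal state knowledge buys in the single-user case.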

For state-dependent models with noncausal state at the encoder, much is known about the single-user case, but the theory is less well developed for multiuser cases. Several groups of researchers [19, 20] study the memoryless additive Gaussian state-dependent MAC of the form $Y^n = X_1^n + X_2^n + S^n + Z^n$. Here, $X_1^n$ and $X_2^n$ are the channel inputs with average power constraints $P_1$ and $P_2$, respectively. The state $S^n$ is a memoryless vector whose elements are noncausally known at both encoders and are zero-mean Gaussian random variables with variance $Q$. The noise $Z^n$ is a memoryless additive vector whose elements are zero-mean Gaussian random variables with variance $N$, independent of the channel inputs and the channel state. The capacity region of this model is the set of rate pairs $(R_1, R_2)$ satisfying

$$
\begin{aligned}
R_1 &\le \frac{1}{2}\log\left(1 + \frac{P_1}{N}\right),\\
R_2 &\le \frac{1}{2}\log\left(1 + \frac{P_2}{N}\right),\\
R_1 + R_2 &\le \frac{1}{2}\log\left(1 + \frac{P_1 + P_2}{N}\right).
\end{aligned}
\tag{3}
$$

As in the single-user Gaussian model, the capacity region (3) indicates that the channel state has no effect on the capacity region if it is noncausally known to both the encoders. Similar to the single-user additive Gaussian models with channel state, DPC at both the encoders achieves (3) and explicit state cancellation is not optimal in terms of the capacity region. It is interesting to study the capacity region for the Gaussian MAC with noncausal channel state at one encoder because DPC cannot be applied at the uninformed encoder.
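The pentagon (3) is easy to tabulate. The Python sketch below returns the three bounds and the two corner points reached by decoding the messages in either order. It uses hypothetical helper names and base-2 logarithms. The state variance $Q$ is not an argument at all, which reflects the observation above that the state has no effect when both encoders are informed.

```python
from math import log2


def C(snr):
    """Gaussian capacity function 0.5 * log2(1 + snr), in bits per channel use."""
    return 0.5 * log2(1 + snr)


def region_both_informed(P1, P2, N):
    """Bounds and corner points of region (3); no state variance appears."""
    r1, r2, r_sum = C(P1 / N), C(P2 / N), C((P1 + P2) / N)
    corners = [(r1, r_sum - r1), (r_sum - r2, r2)]  # one corner per decoding order
    return r1, r2, r_sum, corners


def achievable(R1, R2, P1, P2, N):
    """Membership test for region (3)."""
    r1, r2, r_sum, _ = region_both_informed(P1, P2, N)
    return R1 <= r1 and R2 <= r2 and R1 + R2 <= r_sum


r1, r2, r_sum, corners = region_both_informed(3.0, 12.0, 1.0)
print(r1, r2, r_sum, corners)
```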

For the DM case, the state-dependent MAC with state at one encoder is considered in [21–23] when the informed encoder knows the message of the uninformed encoder. For the Gaussian case in the same scenario, the capacity region is obtained in [22, 24] by deriving nontrivial outer bounds, and it is shown that generalized dirty paper coding (GDPC) achieves the capacity region. The model considered in this paper is also studied from the viewpoint of lattice coding in [25]. Cemal and Steinberg study the state-dependent MAC in which rate-constrained state is known to the encoders and full state is known to the decoder [26]. State-dependent broadcast channels with state available at the encoder have also been studied in the DM case [27, 28] and the Gaussian case [29].

1.3. Main Contributions and Organization of the Paper

We derive an inner bound for the model shown in Figure 1 for the DM case and then specialize it to a binary noiseless case. General outer bounds for these models have been obtained in [30]. However, at present these bounds do not coincide with our inner bounds and are not computable, because there are no bounds on the cardinalities of the auxiliary random variables. In the binary noiseless case, the informed encoder uses a slightly generalized binary DPC, in which the random coding distribution has the channel input random variable correlated with the channel state. If the binary channel state is a Bernoulli$(p)$ random variable with $p < 1/2$, we compare the inner bound with a trivial outer bound obtained by providing the channel state to the decoder, and the bounds do not meet. If $p = 1/2$, we obtain the capacity region by deriving a nontrivial outer bound.

We also derive an inner bound for an additive white Gaussian state-dependent MAC similar to [19, 20], but in the asymmetric case in which one of the encoders has noncausal access to the state. For the inner bound, the informed encoder uses a generalized dirty paper coding (GDPC) scheme in which the random coding distribution exhibits arbitrary correlation between the channel input from the informed encoder and the channel state. The inner bound using GDPC is compared with a trivial outer bound obtained by providing channel state to the decoder. If the channel input from the informed encoder is negatively correlated with the channel state, then GDPC can be interpreted as partial state cancellation followed by standard dirty paper coding. We observe that, in terms of achievable rate region, the informed encoder with GDPC can assist the uninformed encoders. However, in contrast to the case of channel state available at all the encoders [19, 20], it appears that GDPC cannot completely eliminate the effect of the channel state on the capacity region for the Gaussian case.

We also study the Gaussian case when the channel state has asymptotically large variance $Q$, that is, $Q \to \infty$. Interestingly, the uninformed encoders can benefit from the informed encoder's actions. For finite $Q$, the informed encoder uses GDPC. In contrast, we show that standard DPC is sufficient to help the uninformed encoder as $Q \to \infty$. In this latter case, explicit state cancellation is not useful, because it is impossible to explicitly cancel the channel state using the finite power of the informed encoder.
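The power argument can be made concrete with a simple model of partial cancellation. Assume, purely for illustration, that the informed input is split as $X_1 = X_1' - \sqrt{\rho P_1/Q}\,S$. Here $X_1'$ is independent of $S$ and carries the dirty-paper-coded part with power $(1-\rho)P_1$, and $\rho \in [0,1]$ is a hypothetical power-split parameter. The state component left in $X_1 + S$ then has variance $(\sqrt{Q} - \sqrt{\rho P_1})^2$, which grows without bound as $Q \to \infty$ for fixed $P_1$.

```python
from math import sqrt


def partial_cancellation(P1, Q, rho):
    """Illustrative split X1 = X1' - sqrt(rho * P1 / Q) * S, with X1' independent of S.

    Returns (dpc_power, residual_state_variance): the power (1 - rho) * P1 left for the
    dirty-paper-coded part X1', and the variance of the state component remaining in
    X1 + S. The total input power is rho * P1 + (1 - rho) * P1 = P1."""
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [0, 1]")
    dpc_power = (1.0 - rho) * P1
    residual = (sqrt(Q) - sqrt(rho * P1)) ** 2
    return dpc_power, residual


P1 = 4.0
for Q in (4.0, 100.0, 1e6):
    _, residual = partial_cancellation(P1, Q, rho=1.0)  # all power spent on cancellation
    print(f"Q = {Q:g}: residual state variance = {residual:g} ({residual / Q:.3f} of Q)")
```

Even with all of $P_1$ devoted to cancellation, the fraction of the state that survives approaches one as $Q$ grows.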

We organize the rest of the paper as follows. In Section 2, we define some notation and the capacity region. In Section 3, we study a general inner bound for the capacity region of the model in Figure 1 for a DM MAC and also specialize to a binary noiseless case. In this section, we also derive the capacity region of the binary noiseless MAC with maximum entropy channel state. In Section 4, we study inner and outer bounds on the capacity region of the model in Figure 1 for a memoryless Gaussian state-dependent MAC and also study the inner and outer bounds for the capacity region of this model in the case of large channel state variance. Section 5 concludes the paper.

2. Notations and Definitions

Throughout the paper, the notation $x$ is used to denote the realization of the random variable $X$. The notation $x^n$ represents the sequence $(x_1, x_2, \ldots, x_n)$, and the notation $x_i^n$ represents the sequence $(x_i, x_{i+1}, \ldots, x_n)$. Calligraphic letters are used to denote the random variable's alphabet, for example, $X \in \mathcal{X}$. The notations $\mathrm{cl}(\mathcal{A})$ and $\mathrm{co}(\mathcal{A})$ denote the closure operation and the convex hull operation on the set $\mathcal{A}$, respectively.

We consider a memoryless state-dependent MAC, denoted $p(y|x_1,x_2,s)$, whose output $Y \in \mathcal{Y}$ is controlled by the channel input pair $(X_1, X_2) \in \mathcal{X}_1 \times \mathcal{X}_2$ along with the channel state $S \in \mathcal{S}$. These alphabets are discrete sets in the discrete case and the set of real numbers in the Gaussian case. We assume that the state $S_i$ at any time instant $i$ is independently and identically distributed (i.i.d.) according to a probability law $p(s)$. As shown in Figure 1, the state-dependent MAC is embedded in some environment in which the channel state is noncausally known to one encoder.

The informed encoder, provided with the noncausal channel state, wants to send message $W_1$ to the decoder, and the uninformed encoder wants to send $W_2$ to the decoder. The message sources at the informed encoder and the uninformed encoder produce random integers

$$
W_1 \in \{1, \ldots, M_1\}, \qquad W_2 \in \{1, \ldots, M_2\},
$$

respectively, at the beginning of each block of $n$ channel uses. We assume that the messages are independent and the probability of each pair of messages $(w_1, w_2)$ is given by $\frac{1}{M_1 M_2}$.

Definition 1.

A $(M_1, M_2, n)$ code consists of encoding functions

$$
f_1 : \{1, \ldots, M_1\} \times \mathcal{S}^n \to \mathcal{X}_1^n, \qquad f_2 : \{1, \ldots, M_2\} \to \mathcal{X}_2^n
$$

at the informed encoder and the uninformed encoder, respectively, and a decoding function

$$
g : \mathcal{Y}^n \to \{1, \ldots, M_1\} \times \{1, \ldots, M_2\},
$$

where $M_k = 2^{nR_k}$, $k = 1, 2$.

From a $(M_1, M_2, n)$ code, the sequences $X_1^n = f_1(W_1, S^n)$ and $X_2^n = f_2(W_2)$ from the informed encoder and the uninformed encoder, respectively, are transmitted without feedback across a state-dependent MAC $p(y|x_1,x_2,s)$. This MAC is modeled as a discrete memoryless conditional probability distribution, so that

$$
p(y^n | x_1^n, x_2^n, s^n) = \prod_{i=1}^{n} p(y_i | x_{1i}, x_{2i}, s_i).
$$

The decoder, upon receiving the channel output $Y^n$, attempts to reconstruct the messages. The average probability of error is defined as

$$
P_e^{(n)} = \frac{1}{M_1 M_2} \sum_{w_1=1}^{M_1} \sum_{w_2=1}^{M_2} \Pr\big\{ g(Y^n) \ne (w_1, w_2) \,\big|\, (W_1, W_2) = (w_1, w_2) \big\}.
$$

Definition 2.

A rate pair $(R_1, R_2)$ is said to be achievable if there exists a sequence of $(2^{nR_1}, 2^{nR_2}, n)$ codes $\{(f_1, f_2, g)\}$ with $P_e^{(n)} \to 0$ as $n \to \infty$.

Definition 3.

The capacity region $\mathcal{C}$ is the closure of the set of achievable rate pairs $(R_1, R_2)$.

Definition 4.

For given $p(s)$ and $p(y|x_1,x_2,s)$, let $\mathcal{P}$ be the collection of random variables $(Q, S, U, X_1, X_2, Y)$ with probability laws

$$
p(q, s, u, x_1, x_2, y) = p(q)\, p(s)\, p(u, x_1 | s, q)\, p(x_2 | q)\, p(y | x_1, x_2, s),
$$

where $Q$ and $U$ are auxiliary random variables.

3. Discrete Memoryless Case

In this section, we derive an inner bound for the capacity region of the model shown in Figure 1 for a general DM MAC and then specialize it to a binary noiseless MAC. Here, we take $\mathcal{X}_1$, $\mathcal{X}_2$, $\mathcal{S}$, and $\mathcal{Y}$ to be discrete and finite alphabets, and all probability distributions are to be interpreted as probability mass functions.

3.1. Inner Bound for the Capacity Region

The following theorem provides an inner bound for the DM case.

Theorem 1.

Let $\mathcal{R}_{\mathrm{in}}$ be the closure of all rate pairs $(R_1, R_2)$ satisfying

$$
\begin{aligned}
R_1 &\le I(U;Y|X_2,Q) - I(U;S|Q),\\
R_2 &\le I(X_2;Y|U,Q),\\
R_1 + R_2 &\le I(U,X_2;Y|Q) - I(U;S|Q)
\end{aligned}
$$

for some random vector $(Q, S, U, X_1, X_2, Y) \in \mathcal{P}$, where $Q$ and $U$ are auxiliary random variables with bounded cardinalities $|\mathcal{Q}|$ and $|\mathcal{U}|$, respectively. Then the capacity region $\mathcal{C}$ of the DM MAC with one informed encoder satisfies $\mathcal{R}_{\mathrm{in}} \subseteq \mathcal{C}$.
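As a sanity check of Theorem 1, the three bounds can be computed from any finite pmf in $\mathcal{P}$. The Python sketch below takes $Q$ deterministic and specializes to the binary noiseless MAC of Section 3.2. It assumes the hypothetical auxiliary $U = X_1 \oplus S$ with an arbitrary conditional pmf $p(x_1|s)$ and $X_2 \sim$ Bernoulli$(q_2)$. This choice is illustrative only and is not claimed to be the coding distribution used in Corollary 1. Conditional mutual information is computed via $I(A;B|C) = I(A;B,C) - I(A;C)$.

```python
from itertools import product
from math import log2


def h(q):
    """Binary entropy function in bits."""
    return 0.0 if q in (0.0, 1.0) else -q * log2(q) - (1 - q) * log2(1 - q)


def mi(joint, a_idx, b_idx):
    """I(A;B) in bits for a pmf {outcome_tuple: prob}; a_idx, b_idx select coordinates."""
    pa, pb, pab = {}, {}, {}
    for outcome, prob in joint.items():
        a = tuple(outcome[i] for i in a_idx)
        b = tuple(outcome[i] for i in b_idx)
        pa[a] = pa.get(a, 0.0) + prob
        pb[b] = pb.get(b, 0.0) + prob
        pab[a, b] = pab.get((a, b), 0.0) + prob
    return sum(pr * log2(pr / (pa[a] * pb[b])) for (a, b), pr in pab.items() if pr > 0)


S, U, X2, Y = 0, 1, 2, 3  # coordinate positions in each outcome tuple


def theorem1_bounds(p_s, p_x1_given_s, q2):
    """Bounds of Theorem 1 with deterministic Q for Y = X1 xor X2 xor S,
    assuming the illustrative auxiliary U = X1 xor S and X2 ~ Bernoulli(q2)."""
    joint = {}
    for s, x1, x2 in product((0, 1), repeat=3):
        prob = p_s[s] * p_x1_given_s[s][x1] * (q2 if x2 else 1 - q2)
        if prob > 0:
            outcome = (s, x1 ^ s, x2, x1 ^ x2 ^ s)
            joint[outcome] = joint.get(outcome, 0.0) + prob
    i_us = mi(joint, (U,), (S,))
    r1 = mi(joint, (U,), (X2, Y)) - mi(joint, (U,), (X2,)) - i_us  # I(U;Y|X2) - I(U;S)
    r2 = mi(joint, (X2,), (U, Y)) - mi(joint, (X2,), (U,))         # I(X2;Y|U)
    r_sum = mi(joint, (U, X2), (Y,)) - i_us                         # I(U,X2;Y) - I(U;S)
    return r1, r2, r_sum


q1, q2 = 0.11, 0.05
r1, r2, r_sum = theorem1_bounds({0: 0.5, 1: 0.5},
                                {s: {0: 1 - q1, 1: q1} for s in (0, 1)}, q2)
print(f"R1 <= {r1:.4f}, R2 <= {r2:.4f}, R1 + R2 <= {r_sum:.4f}")
```

Passing a `p_x1_given_s` whose rows differ for $s = 0$ and $s = 1$ models an input correlated with the state. With a uniform state and $X_1$ independent of $S$, as in the example, the bounds evaluate to $h(q_1)$, $h(q_2)$, and $h(q_1)$.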

Proof.

The above inner bound can be proved by essentially combining random channel coding for the DM MAC [31] and random channel coding with noncausal state at the encoders [16]. For completeness, a proof using joint decoding is given in Appendix A.1.

Remarks

-

(i)

The inner bound of Theorem 1 can be obtained by applying Gel'fand-Pinsker coding [16] at the informed encoder. At the uninformed encoder, the codebook is generated in the same way as for a regular DM MAC [31].

-

(ii)

The region $\mathcal{R}_{\mathrm{in}}$ in Theorem 1 is convex due to the auxiliary time-sharing random variable $Q$.

-

(iii)

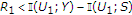

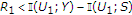

The inner bound $\mathcal{R}_{\mathrm{in}}$ of Theorem 1 can also be obtained by time-sharing between two successive decoding schemes. In each scheme, one encoder's message is decoded first, and the decoded codeword and the channel output are then used to decode the other encoder's message. On one hand, consider first decoding the message of the informed encoder. Following [16], if $R_1 \le I(U;Y|Q) - I(U;S|Q)$, we can decode the codeword $U^n$ of the informed encoder with arbitrarily low probability of error. Now, we use $U^n$ along with $Y^n$ to decode $X_2^n$. Under these conditions, if $R_2 \le I(X_2;Y|U,Q)$, then we can decode the message of the uninformed encoder with arbitrarily low probability of error. On the other hand, if we change the decoding order of the two messages, the constraints are $R_2 \le I(X_2;Y|Q)$ and $R_1 \le I(U;Y|X_2,Q) - I(U;S|Q)$. By the chain rule, both corner points lie on the line $R_1 + R_2 = I(U,X_2;Y|Q) - I(U;S|Q)$. By time-sharing between these two successive decoding schemes and taking the convex closure, we can obtain the inner bound $\mathcal{R}_{\mathrm{in}}$ of Theorem 1.
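The chain-rule step behind the time-sharing argument can be checked numerically: for any joint pmf, both decoding orders give the same sum rate $I(U,X_2;Y|Q) - I(U;S|Q)$. The Python sketch below takes $Q$ deterministic and uses hypothetical helper names. It draws a random pmf with $X_2$ independent of $(S,U)$, folds $X_1$ into the conditional law of $Y$ given $(S,U,X_2)$, and computes both corner points. For an arbitrary pmf some of these rate values can be negative; the check concerns only the identity.

```python
import random
from itertools import product
from math import log2


def mi(joint, a_idx, b_idx):
    """I(A;B) in bits for a pmf {outcome_tuple: prob}; a_idx, b_idx select coordinates."""
    pa, pb, pab = {}, {}, {}
    for outcome, prob in joint.items():
        a = tuple(outcome[i] for i in a_idx)
        b = tuple(outcome[i] for i in b_idx)
        pa[a] = pa.get(a, 0.0) + prob
        pb[b] = pb.get(b, 0.0) + prob
        pab[a, b] = pab.get((a, b), 0.0) + prob
    return sum(pr * log2(pr / (pa[a] * pb[b])) for (a, b), pr in pab.items() if pr > 0)


def random_joint(k=3, seed=0):
    """Random pmf p(s,u) p(x2) p(y|s,u,x2) on {0,...,k-1}^4, outcome order (S, U, X2, Y)."""
    rng = random.Random(seed)

    def simplex(n):
        w = [rng.random() + 1e-12 for _ in range(n)]  # strictly positive weights
        total = sum(w)
        return [v / total for v in w]

    p_su, p_x2 = simplex(k * k), simplex(k)
    joint = {}
    for s, u, x2 in product(range(k), repeat=3):
        p_y = simplex(k)  # p(y | s, u, x2), with X1 absorbed into it
        for y in range(k):
            joint[s, u, x2, y] = p_su[s * k + u] * p_x2[x2] * p_y[y]
    return joint


def corner_points(joint):
    """Rate pairs of both successive-decoding orders, plus the sum-rate bound."""
    S, U, X2, Y = 0, 1, 2, 3
    i_us = mi(joint, (U,), (S,))
    # Decode U first, then X2 from (Y, U).
    first_u = (mi(joint, (U,), (Y,)) - i_us,
               mi(joint, (X2,), (U, Y)) - mi(joint, (X2,), (U,)))
    # Decode X2 first, then U from (Y, X2).
    first_x2 = (mi(joint, (U,), (X2, Y)) - mi(joint, (U,), (X2,)) - i_us,
                mi(joint, (X2,), (Y,)))
    r_sum = mi(joint, (U, X2), (Y,)) - i_us
    return first_u, first_x2, r_sum


def time_share(a, b, lam):
    """Rate pair from using scheme a a fraction lam of the time and scheme b otherwise."""
    return tuple(lam * x + (1 - lam) * y for x, y in zip(a, b))


first_u, first_x2, r_sum = corner_points(random_joint(seed=1))
mid_point = time_share(first_u, first_x2, 0.5)
print(first_u, first_x2, r_sum, mid_point)
```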

3.2. Binary Noiseless Example

In this section, we specialize Theorem 1 to a binary noiseless state-dependent MAC of the form $Y^n = X_1^n \oplus X_2^n \oplus S^n$. Here, $X_1^n$ and $X_2^n$ are channel inputs whose numbers of binary ones are at most $nq_1$ and $nq_2$, respectively, with $0 \le q_1 \le 1$ and $0 \le q_2 \le 1$. The state $S^n$ is a memoryless vector whose elements are noncausally known to one encoder and are i.i.d. Bernoulli$(p)$ random variables, $0 \le p \le 1$. The operator $\oplus$ represents modulo-2 addition. By symmetry, we assume that $q_1 \le 1/2$, $q_2 \le 1/2$, and $p \le 1/2$.

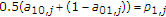

3.2.1. Inner and Outer Bounds

The following corollary gives an inner bound for the capacity region of the binary noiseless MAC. It is obtained by applying a slightly generalized binary DPC at the informed encoder, in which the channel input $X_1$ and the channel state $S$ are correlated.

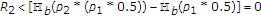

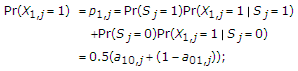

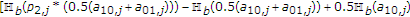

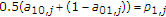

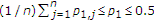

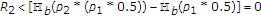

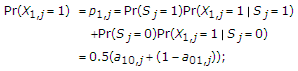

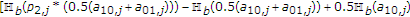

Definition 5.

Let  be the set of all rate pairs

be the set of all rate pairs  satisfying

satisfying

for  , where

, where

and  , and

, and  Let

Let

Corollary 1.

The capacity region $\mathcal{C}$ for the binary noiseless state-dependent MAC with one informed encoder contains the inner bound of Definition 5.

Proof.

Encoding and decoding are similar to those explained for the general DM case above. The informed encoder uses generalized binary DPC, in which the random coding distribution allows arbitrary correlation between the channel input from the informed encoder and the known state. We consider an auxiliary random variable $U$ and a channel input $X_1$ whose dependence on $S$ is governed by two parameters, chosen such that the input constraint $q_1$ is satisfied. We compute the region defined in Theorem 1 using the resulting probability mass function of $(S, U, X_1)$ for all admissible parameter values and a deterministic $Q$, which yields the region in (9). A deterministic $Q$ suffices in the binary case because we explicitly take the convex hull of the union of the regions computed with these distributions. This completes the proof.

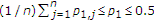

The following proposition provides a trivial outer bound for the capacity region of the binary noiseless MAC with one informed encoder. We do not provide a proof because this outer bound can be easily obtained if we provide the channel state to the decoder.

Proposition 1.

Let $\mathcal{R}_{\mathrm{out}}$ be the set of all rate pairs $(R_1, R_2)$ satisfying

Then the capacity region $\mathcal{C}$ for the binary noiseless MAC with one informed encoder satisfies $\mathcal{C} \subseteq \mathcal{R}_{\mathrm{out}}$.

3.2.2. Numerical Example

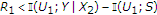

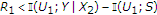

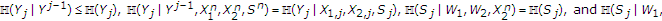

Figure 2 depicts the inner bound using generalized binary DPC specified in Corollary 1 and the outer bound specified in Proposition 1 for fixed values of $q_1$, $q_2$, and $p$. Two further curves are shown for comparison. The first is an inner bound using binary DPC alone, that is, generalized binary DPC with the channel input $X_1$ independent of the state $S$. The second is the inner bound for the capacity region in the case where the state is known to neither the encoders nor the decoder.

These results show that the inner bound obtained by generalized binary DPC is larger than that obtained using binary DPC [32]. They also suggest that the informed encoder can help the uninformed encoder using binary DPC [32] as well as generalized binary DPC. Even though the state is known to only one encoder, both encoders can benefit in terms of achievable rates compared to the case in which the channel state is unavailable at the encoders and the decoder.

3.2.3. Maximum Entropy State

In this section, we discuss how the uninformed encoder benefits from the actions of the informed encoder even if $p = 1/2$, so that $H(S) = 1$. The following corollary provides the capacity region of the noiseless binary MAC with one informed encoder in this case.

Corollary 2.

For the given input constraints $q_1$ and $q_2$, the capacity region of the binary noiseless MAC with one informed encoder is the set of rate pairs $(R_1, R_2)$ satisfying

$$
\begin{aligned}
R_2 &\le h(q_2),\\
R_1 + R_2 &\le h(q_1),
\end{aligned}
\tag{14}
$$

where $h(\cdot)$ denotes the binary entropy function.

Proof.

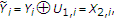

The region (14) is achieved if the informed encoder employs generalized binary DPC with suitably chosen parameters, or standard binary DPC. We obtain (14) from (10) by substituting the corresponding parameter values into (10). A converse proof for the above capacity region is given in Appendix A.2.
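The two constraints of (14) translate directly into a membership test and two corner points. The Python sketch below assumes $0 \le q_1, q_2 \le 1/2$, so that $h$ is increasing on the relevant range; the function names are hypothetical.

```python
from math import log2


def h(q):
    """Binary entropy function in bits."""
    return 0.0 if q in (0.0, 1.0) else -q * log2(q) - (1 - q) * log2(1 - q)


def in_region_14(r1, r2, q1, q2):
    """True if (r1, r2) is nonnegative and satisfies R2 <= h(q2) and R1 + R2 <= h(q1)."""
    return r1 >= 0 and r2 >= 0 and r2 <= h(q2) and r1 + r2 <= h(q1)


def corner_points_14(q1, q2):
    """Corner points of (14) for 0 <= q1, q2 <= 1/2, where h is increasing."""
    r2_max = h(min(q1, q2))  # R2 is capped by h(q2) and, via the sum bound, by h(q1)
    return (h(q1) - r2_max, r2_max), (h(q1), 0.0)


for q2 in (0.05, 0.2):
    print(q2, corner_points_14(0.11, q2))
```

The first corner is the one reached by decoding the informed encoder's codeword first. The second is reached by decoding the uninformed encoder's message first.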

Remarks

-

(i)

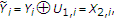

From (14), we see that the uninformed encoder can achieve rates up to $\min\{h(q_1), h(q_2)\}$, even though the channel has maximum entropy state. Let us investigate how the uninformed encoder can benefit from the informed encoder's actions even in this case. We use successive decoding in which $U^n$ is decoded first using $Y^n$, and then $X_2^n$ is decoded using $Y^n$ and $U^n$. The informed encoder generates its codewords with standard binary DPC, that is, generalized binary DPC with $X_1$ independent of $S$. The uninformed encoder generates its codewords with a Bernoulli$(q_2')$ random variable, where $q_2' \le q_2$. In the case of maximum entropy state, $U^n$ can be decoded first with arbitrarily low probability of error if $R_1$ satisfies

$$
R_1 \le I(U;Y) - I(U;S) = h(q_1) - h(q_2')
\tag{15}
$$

for $U = X_1 \oplus S$ with $X_1 \sim$ Bernoulli$(q_1)$ independent of $S$, and $X_2 \sim$ Bernoulli$(q_2')$. The channel output can be written as $Y = U \oplus X_2$ because $U = X_1 \oplus S$. Using $U^n$, we can generate a new channel output for decoding $X_2^n$ as

$$
\tilde{Y}^n = Y^n \oplus U^n = X_2^n.
\tag{16}
$$

Since no binary noise is present in $\tilde{Y}^n$ for decoding $X_2^n$, the message of the uninformed encoder can be decoded with arbitrarily low probability of error if $R_2 \le h(q_2')$. Here $q_2'$ must satisfy both $q_2' \le q_2$ and $h(q_2') \le h(q_1)$, which keeps the right-hand side of (15) nonnegative. Then the bound on $R_2$ can be written as

$$
R_2 \le h(\min\{q_1, q_2\}).
\tag{17}
$$

If $q_2 \le q_1$, we can achieve $R_2 = h(q_2)$ as if there were no state in the channel, even though the maximum entropy binary state is present and is not known to the uninformed encoder. If $q_2 > q_1$, the uninformed encoder can still achieve positive rates, that is, $R_2 = h(q_1)$.

-

(ii)

Let us now discuss how the informed encoder achieves rates up to $h(q_1)$ using successive decoding in the reverse order. That is, $X_2^n$ is decoded first using $Y^n$, and then $U^n$ is decoded using $Y^n$ and $X_2^n$. If $p = 1/2$, $X_2^n$ can be decoded with arbitrarily low probability of error only if $R_2 \le I(X_2;Y) = 0$, because $Y$ is then independent of $X_2$. This means that only $R_2 = 0$ is achievable. Then $R_1 = h(q_1)$ is achievable with $U = X_1 \oplus S$ and $X_1 \sim$ Bernoulli$(q_1)$ independent of $S$.
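The two decoding orders in the remarks above can be checked mechanically. The Python sketch below assumes a uniform state, standard binary DPC with $X_1$ independent of $S$, and $U = X_1 \oplus S$; the helper names are hypothetical. It verifies bit by bit the identity behind (16), namely that $Y \oplus U = X_2$ once $U^n$ is known. It also computes $I(X_2;Y)$ for the reverse order. It is a sanity check of the identities, not a decoder.

```python
import random
from math import log2


def check_cancellation(n, q1, q2, seed=0):
    """True if Y xor U reproduces X2 bit by bit, as in (16).
    Assumes a uniform state (p = 1/2), X1 independent of S, and U = X1 xor S."""
    rng = random.Random(seed)
    s = [rng.randrange(2) for _ in range(n)]          # maximum-entropy state
    x1 = [int(rng.random() < q1) for _ in range(n)]   # informed input, independent of S
    x2 = [int(rng.random() < q2) for _ in range(n)]   # uninformed input
    y = [a ^ b ^ c for a, b, c in zip(x1, x2, s)]     # Y = X1 xor X2 xor S
    u = [a ^ c for a, c in zip(x1, s)]                # U = X1 xor S
    y_tilde = [a ^ b for a, b in zip(y, u)]           # Y xor U
    return y_tilde == x2


def mi_x2_y(q2):
    """I(X2;Y) in bits for Y = U xor X2, U ~ Bernoulli(1/2) independent of X2 ~ Bernoulli(q2)."""
    p_x2 = {0: 1 - q2, 1: q2}
    p_joint = {(x2, y): p_x2[x2] * 0.5 for x2 in (0, 1) for y in (0, 1)}
    p_y = {y: sum(p_joint[x2, y] for x2 in (0, 1)) for y in (0, 1)}
    return sum(p * log2(p / (p_x2[x2] * p_y[y]))
               for (x2, y), p in p_joint.items() if p > 0)


print(check_cancellation(1000, 0.11, 0.3), mi_x2_y(0.3))
```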

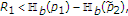

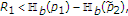

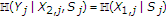

Let us illustrate the case of maximum entropy binary state with numerical examples. Figure 3 illustrates the region given in Corollary 2 for a fixed $q_1$ and $p = 1/2$ in two cases, $q_2 \le q_1$ and $q_2 > q_1$. In both cases, these numerical examples suggest that the uninformed encoder achieves positive rates from the actions of the informed encoder, as discussed above. In the case of $q_2 > q_1$, the uninformed encoder can still achieve $R_2 = h(q_1)$. This holds even though the channel state has high entropy and is not known to the uninformed encoder, and the informed encoder has input constraint $q_1$.

4. Gaussian Memoryless Case

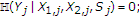

In this section, we develop inner and outer bounds for the memoryless Gaussian case. The additive Gaussian MAC with one informed encoder is shown in Figure 4. The output of the channel is  , where  and  are the channel inputs with average power constraints  and  with probability one, respectively;  is the memoryless state vector whose elements are zero-mean Gaussian random variables with variance  ; and  is the memoryless additive noise vector whose elements are zero-mean Gaussian random variables with variance  and independent of the channel inputs and the state.

4.1. Inner and Outer Bounds on the Capacity Region

The following definition and theorem give an inner bound for the Gaussian MAC with one informed encoder. To obtain the inner bound for this case, we apply generalized dirty paper coding (GDPC) at the informed encoder.

Definition 6.

Let  for a given  , and a given  , where

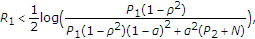

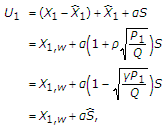

Theorem 2.

Let  be the set of all rate pairs  satisfying  ,  , and  for given  and  . Let

Then the capacity region  of the Gaussian MAC with one informed encoder satisfies

Proof.

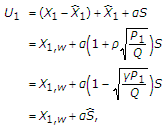

Our results for the DM MAC can readily be extended to memoryless channels with discrete time and continuous alphabets using standard techniques [33]. The informed encoder uses GDPC, in which the random coding distribution allows arbitrary correlation between the channel input from the informed encoder and the known channel state. Fix a correlation parameter  . We then consider the auxiliary random variable  , where  is a real number whose range will be discussed later,  and  are correlated with correlation coefficient  ,  , and  . We consider  . Encoding and decoding are performed similarly to the proof of Theorem 1 in Section 3.1. In this case, we assume that  is deterministic, because time-sharing of regions with different distributions is accomplished by explicitly taking the convex hull of the union of regions with different distributions. We evaluate (9) using the jointly Gaussian distribution of random variables  ,  ,  ,  ,  , and  for a given  and obtain  . Also note that we restrict  to  for a given  . By varying  and  , we obtain different achievable rate regions  . Taking the union of regions  obtained by varying  and  , followed by the closure and convex hull operations, completes the proof.
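As a numerical aid (our illustration, not part of the original proof), the Python sketch below evaluates the Gel'fand-Pinsker-type difference I(U;Y) - I(U;S) in closed form for jointly Gaussian variables. It assumes the illustrative model U = X1 + alpha*S and Y = X1 + S + W, where X1 (power P1) and S (variance Q) have correlation coefficient rho and W (variance N_eff) is an independent Gaussian term lumping together everything the decoder treats as noise. These names are hypothetical and the function is not claimed to reproduce the exact rate expressions of Theorem 2. For rho = 0 and alpha = P1/(P1 + N_eff), it should recover Costa's dirty paper rate 0.5*log2(1 + P1/N_eff) for every Q.

```python
import math

def gdpc_rate(P1, Q, N_eff, alpha, rho):
    """I(U;Y) - I(U;S) in bits for the illustrative model
    U = X1 + alpha*S, Y = X1 + S + W, with (X1, S) jointly Gaussian,
    Var(X1) = P1, Var(S) = Q, corr(X1, S) = rho (|rho| < 1),
    and W ~ N(0, N_eff) independent of (X1, S). Hypothetical helper."""
    c = rho * math.sqrt(P1 * Q)                 # Cov(X1, S)
    var_u = P1 + alpha**2 * Q + 2 * alpha * c
    var_y = P1 + Q + 2 * c + N_eff
    cov_us = c + alpha * Q
    cov_uy = P1 + alpha * Q + (1 + alpha) * c
    # For Gaussian variables, conditional variance equals the LMMSE error variance.
    var_u_given_s = var_u - cov_us**2 / Q
    var_u_given_y = var_u - cov_uy**2 / var_y
    # I(U;Y) - I(U;S) = 0.5*log2(Var(U)/Var(U|Y)) - 0.5*log2(Var(U)/Var(U|S))
    return 0.5 * math.log2(var_u_given_s / var_u_given_y)


if __name__ == "__main__":
    P1, N_eff = 10.0, 2.0
    costa_alpha = P1 / (P1 + N_eff)
    for Q in (1.0, 100.0, 1e4):
        # Each value should equal 0.5*log2(1 + P1/N_eff) up to rounding.
        print(Q, gdpc_rate(P1, Q, N_eff, costa_alpha, rho=0.0))
    # A negatively correlated (partial-cancellation) operating point:
    print(gdpc_rate(P1, 100.0, N_eff, alpha=0.5, rho=-0.3))
```

Only second moments are needed because, for Gaussians, the conditional variances coincide with LMMSE residuals; setting rho < 0 explores the partial-cancellation regime discussed in the remarks.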

Remarks

-

(i)

In both standard DPC [18] and GDPC, the auxiliary random variable is given by  . In GDPC,  and  are jointly correlated with correlation coefficient  , whereas in standard DPC they are uncorrelated. If the channel input  is negatively correlated with the channel state  , then GDPC can be viewed as partial state cancellation followed by standard DPC. To see this, let us assume that  is negative and denote  as the linear estimate of  from  under the minimum mean square error (MMSE) criterion. Accordingly,  . We can rewrite the auxiliary random variable  as follows:

(21)

where  ,  can be viewed as the remaining state after state cancellation using power  , and  is the estimation error, which has variance  and is uncorrelated with  . Thus, GDPC with a negative correlation coefficient  can be interpreted as standard DPC with power  applied to the remaining state  after state cancellation using power  .

-

(ii)

In this paper, we focus on the two-encoder model in which one encoder is informed and the other is uninformed, but the concepts can be extended to models with any number of informed and uninformed encoders. The informed encoders apply GDPC to help the uninformed encoders. Following [19, 20], the informed encoders are not affected by the actions of the other informed encoders, because each informed encoder can eliminate the effect of the remaining state on its own transmission after the informed encoders' state cancellation.
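Remark (i) rests on a standard linear MMSE fact: if X1 and S are zero-mean jointly Gaussian with variances P1 and Q and correlation coefficient rho, the LMMSE estimate of X1 from S is a*S with a = rho*sqrt(P1/Q), the error X1 - a*S has variance (1 - rho^2)*P1 and is uncorrelated with S, and X1 + S = (X1 - a*S) + (1 + a)*S. For rho < 0, the last term is the state left after spending power rho^2*P1 on cancellation, with variance (sqrt(Q) + rho*sqrt(P1))^2. The NumPy Monte Carlo sketch below checks these identities; the names, parameter values, and the identification with the quantities in (21) are our assumptions, since the paper's symbols are not reproduced here.

```python
import numpy as np

def partial_cancellation_stats(P1, Q, rho, n=200_000, seed=0):
    """Monte Carlo check of the LMMSE decomposition X1 = a*S + E (illustrative)."""
    rng = np.random.default_rng(seed)
    c = rho * np.sqrt(P1 * Q)                                  # Cov(X1, S)
    X1, S = rng.multivariate_normal([0.0, 0.0], [[P1, c], [c, Q]], size=n).T
    a_hat = np.mean(X1 * S) / np.mean(S * S)                   # empirical LMMSE coefficient
    E = X1 - a_hat * S                                         # estimation error
    S_rem = (1.0 + a_hat) * S                                  # X1 + S = E + (1 + a) * S
    return {
        "a_hat": a_hat, "a_theory": rho * np.sqrt(P1 / Q),
        "err_var": np.var(E), "err_var_theory": (1 - rho**2) * P1,
        "err_corr_with_S": np.corrcoef(E, S)[0, 1],
        "cancel_power": np.var(a_hat * S), "cancel_power_theory": rho**2 * P1,
        "rem_var": np.var(S_rem), "rem_var_theory": (np.sqrt(Q) + rho * np.sqrt(P1))**2,
    }

if __name__ == "__main__":
    for key, val in partial_cancellation_stats(P1=4.0, Q=9.0, rho=-0.5).items():
        print(f"{key}: {val:.4f}")
```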

The following proposition gives a trivial outer bound for the capacity region of the Gaussian MAC with one informed encoder. We do not provide a proof because this bound coincides with the capacity region of the additive white Gaussian MAC with all encoders informed [19, 20], with the capacity region of the additive white Gaussian MAC with state known only to the decoder, and with the capacity region of the additive white Gaussian MAC without state.

Proposition 2.

Let  be the set of all rate pairs  satisfying

Then the capacity region  for the Gaussian MAC with one informed encoder satisfies  .
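The regions cited above share the familiar pentagon of the two-user Gaussian MAC: R1 <= 0.5*log2(1 + P1/N), R2 <= 0.5*log2(1 + P2/N), and R1 + R2 <= 0.5*log2(1 + (P1 + P2)/N), in bits per channel use. The Python sketch below checks membership in that pentagon and computes its two corner points via successive decoding. The names P1, P2, and N for the two powers and the noise variance are assumptions made for the sketch.

```python
import math

def c(snr):
    """Gaussian capacity 0.5*log2(1 + snr), bits per channel use."""
    return 0.5 * math.log2(1.0 + snr)

def in_gaussian_mac_region(R1, R2, P1, P2, N, tol=1e-12):
    """Membership test for the two-user Gaussian MAC pentagon."""
    return (R1 >= 0 and R2 >= 0
            and R1 <= c(P1 / N) + tol
            and R2 <= c(P2 / N) + tol
            and R1 + R2 <= c((P1 + P2) / N) + tol)

def corner_points(P1, P2, N):
    """Corner points achieved by successive decoding."""
    # Decode user 2 first, treating user 1 as noise; then user 1 without interference.
    a = (c(P1 / N), c(P2 / (P1 + N)))
    # Decode user 1 first, treating user 2 as noise; then user 2 without interference.
    b = (c(P1 / (P2 + N)), c(P2 / N))
    return a, b

if __name__ == "__main__":
    a, b = corner_points(1.0, 1.0, 1.0)
    print(a, b, sum(a), c(2.0))  # both corners lie on the sum-rate face
```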

4.2. Numerical Example

Figure 5 depicts the inner bound using GDPC given in Theorem 2 and the outer bound specified in Proposition 2 for the case in which  ,  ,  , and  . Also shown for comparison are an inner bound using DPC alone, that is, GDPC with  and  as a parameter, and the capacity region for the case in which the state is unavailable at the encoders and the decoder.

These results suggest that the informed encoder can help the uninformed encoder using DPC as well as GDPC. Even though the state is known at only one encoder, both encoders benefit from allowing negative correlation between the channel input  and the state  at the informed encoder, since the negative correlation allows the informed encoder to partially cancel the state. The achievable rate region  obtained by applying DPC [18] with  as a parameter is always contained in  in (20). In contrast to the case of state available to both encoders [19, 20], GDPC is not sufficient to completely mitigate the effect of the state on the capacity region.

Figure 6 illustrates how the maximum rate of the uninformed encoder  varies with the channel state variance  if  , for  , and  . As shown in Figure 6,  decreases as  increases, because the variance of the remaining state after state cancellation by the informed encoder also increases. The decrease in  is slower as  increases, because the informed encoder can help the uninformed encoder more in terms of achievable rates as its power increases.

4.3. Asymptotic Analysis

In this section, we discuss the inner bound in Theorem 2 as  .

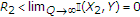

Definition 7.

Let  be the set of all rate pairs  satisfying  ,  , and  for a given  and  , where

(  for  is defined in (18) and is a function of  , though the variable  is not mentioned in the notation  .)

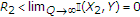

Corollary 3.

As the variance of the state becomes very large, that is,  , an inner bound for the capacity region of the Gaussian MAC with one informed encoder is given by

Remarks

-

(i)

Let us investigate how the uninformed encoder can benefit from the informed encoder's actions even as  . For this discussion, consider successive decoding in which the auxiliary codeword  of the informed encoder is decoded first using the channel output  , and then the codeword  of the uninformed encoder is decoded using  and  . In the limit as  ,  can be decoded first with arbitrarily low probability of error if  satisfies

(25)

where  and  . The right-hand side of (25) is obtained by calculating the expression  for the assumed jointly Gaussian distribution and letting  . The channel output can be written as  because  for  . The estimate of  using  is denoted as  for  .

Using  and  , we can generate a new channel output for decoding  as

for  . Since all random variables are identically distributed across time, we omit the subscript  in the following discussion. The variance of the total noise present in elements of  for decoding  is  , where  is the variance of  , and  is the error of estimating  from  . Then the message of the uninformed encoder can be decoded with arbitrarily low probability of error if  for given  and  . Even if the variance of the state becomes infinite, a nonzero rate for the uninformed encoder can be achieved, because the estimation error remains finite for  owing to the increase of the variance of  with the increase of the state variance.

Our aim is to minimize the variance of the estimation error  to maximize  over  and  . Since the right-hand side of (25) is nonnegative for  and  , we consider only these values. The variance of the estimation error is decreasing in both  and  and is increasing in the remaining range of  . Then  achieves its maximum at  and  . If  , so that  is nonnegative, then  is achievable. In this case, the uninformed encoder fully benefits from the actions of the informed encoder, specifically from its auxiliary codewords, even though the variance of the interfering state is very large. If  , then  is achievable, where  . In either case, GDPC with  is optimal in terms of assisting the uninformed encoder, contrary to the case of finite state variance. This makes sense because if the state has infinite variance, then it is impossible for the informed encoder to explicitly cancel it with finite power.

-

(ii)

To investigate how the informed encoder achieves its maximum rate, let us consider successive decoding in the reverse order, in which  is decoded first using  , and then  is decoded using  and  . As  ,  can be decoded with arbitrarily low probability of error if  . This means that only  is achievable. Then  is achievable with  and  .
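A rough numerical illustration of Remark (i), under assumptions that are ours rather than the paper's: take the auxiliary variable as U = X1 + alpha*S with X1 independent of S (rho = 0) and alpha = 1, model X1, X2, S, Z as independent zero-mean Gaussians with variances P1, P2, Q, N, and decode U first and then X2. The sketch computes R1 = I(U;Y) - I(U;S) and R2 = I(X2;Y|U). Under these assumptions one can check that Y - U = X2 + Z, so R2 stays at 0.5*log2(1 + P2/N) for every Q, while R1 tends to 0.5*log2(P1/(P2 + N)), which is nonnegative only when P1 >= P2 + N. The helper name and closed forms are illustrative and are not claimed to be the expressions in Corollary 3.

```python
import math

def successive_rates(P1, P2, Q, N, alpha):
    """Rates in bits when U = X1 + alpha*S is decoded first and X2 second,
    with X1, X2, S, Z independent zero-mean Gaussians (rho = 0).
    Illustrative assumption, not the paper's formula."""
    var_u = P1 + alpha**2 * Q
    var_y = P1 + P2 + Q + N
    cov_uy = P1 + alpha * Q                 # also equals Cov(U, X1 + S + Z)
    var_u_given_y = var_u - cov_uy**2 / var_y
    R1 = 0.5 * math.log2(P1 / var_u_given_y)      # Var(U|S) = P1 when rho = 0
    # Interference X1 + S + Z left after conditioning on U (Gaussian: LMMSE residual).
    resid = (P1 + Q + N) - cov_uy**2 / var_u
    R2 = 0.5 * math.log2(1.0 + P2 / resid)
    return R1, R2

if __name__ == "__main__":
    P1, P2, N = 10.0, 1.0, 1.0
    for Q in (1.0, 1e2, 1e4, 1e8):
        print(Q, successive_rates(P1, P2, Q, N, alpha=1.0))
    print("limit R1:", 0.5 * math.log2(P1 / (P2 + N)),
          "R2:", 0.5 * math.log2(1 + P2 / N))
```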

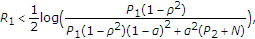

The following proposition gives an outer bound for the Gaussian MAC with one informed encoder as

Proposition 3.

As  , an outer bound for the capacity region of the Gaussian MAC with one informed encoder is the set of rate pairs  satisfying

We do not provide a proof of the above proposition because it is similar to the converse proof given in Appendix A.2. The outer bound in Proposition 3 is tighter than the trivial outer bound in Proposition 2, which is obtained by giving the channel state to the decoder.

Finally, let us discuss the case of strong additive Gaussian channel state, that is,  , with numerical examples. Figures 7 and 8 illustrate the inner bound in Corollary 3 and the outer bound in Proposition 3 in two cases,  ( ) and  ( ), respectively, for  and  . In both cases, the uninformed encoder achieves positive rates from the actions of the informed encoder, as discussed above. In the case of  ( ), the informed encoder can still achieve  , even though the additive channel state is very strong and is not known to the uninformed encoder, and the informed encoder has finite power.

As  increases and  , in the strong additive Gaussian state case, the inner bound in Corollary 3 and the outer bound in Proposition 3 meet asymptotically. Thus, we obtain the capacity region for  and  . For very large values of  , the outer bound given in Proposition 3 is achieved asymptotically with  . Figure 9 shows the inner bound in the strong additive state case, compared with the outer bound in Proposition 3 for very large values of  , that is,  .

5. Conclusions

In this paper, we considered a state-dependent MAC with state known to some, but not all, encoders. We derived an inner bound for the DM case and specialized it to a noiseless binary case using generalized binary DPC. If the channel state is a Bernoulli  random variable with  , we compared the inner bound in the binary case with a trivial outer bound obtained by providing the channel state to only the decoder. The inner bound obtained by generalized binary DPC does not meet the trivial outer bound for  . For  , we obtain the capacity region for the binary noiseless case by deriving a nontrivial outer bound.

For the Gaussian case, we also derived an inner bound using GDPC and an outer bound by also providing the channel state to the decoder. It appears that the uninformed encoder benefits from GDPC because explicit state cancellation is present in GDPC. In the case of strong Gaussian state, that is, the variance of the state going to infinity, we also specialized the inner bound and analyzed how the uninformed encoder benefits from the auxiliary codewords of the informed encoder even in this case, where explicit state cancellation is not helpful. In the case of strong channel state, we also derived a nontrivial outer bound that is tighter than the trivial outer bound. These bounds meet asymptotically if  and  . In these special cases of both the binary and the Gaussian channels, the inner bounds meet the nontrivial outer bounds. In general, however, we are not able to show that the random coding techniques and inner bounds in this paper achieve the capacity region, owing to the lack of nontrivial outer bounds for all cases of this problem.

Appendix

A.1. Proof of Theorem 1

In this section, we construct a sequence of codes  with  as  if  satisfies (9). The random coding used in this section is a combination of Gel'fand-Pinsker coding [16] and coding for the MAC [31]. This random coding is not a new technique, but it is included for completeness. Fix  and take  .

a. Encoding and Decoding

Encoding

The encoding strategy at the two encoders is as follows. Let  ,  , and  . At the informed encoder, where the state is available, generate  sequences  , whose elements are drawn i.i.d. with  , for each time-sharing random sequence  , where  , and  . Here,  indexes bins and  indexes sequences within a particular bin  . For encoding, given state  , time-sharing sequence  , and message  , look in bin  for a sequence  such that  . Then, the informed encoder generates  from  according to the probability law  .

At the uninformed encoder, sequences  , whose elements are drawn i.i.d. with  , are generated for each time-sharing sequence  , where  . The uninformed encoder chooses  to send the message  for a given time-sharing sequence  and sends the codeword  .

Given the inputs and the state, the decoder receives  according to the conditional probability distribution  . It is assumed that the time-sharing sequence  is noncausally known to both encoders and the decoder.
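The binning step at the informed encoder can be pictured with a toy codebook. The Python sketch below is a minimal illustration under assumed binary alphabets, a hypothetical joint pmf p(u, s), and a tiny block length far from the asymptotic regime. Sequences are drawn i.i.d. from the marginal of U and stored as codebook[bin, index]; the encoder searches the bin selected by the message for a sequence whose joint type with s is within eps of p(u, s), a simplified stand-in for joint strong typicality. A failed search plays the role of the encoding-failure event in the error analysis. The generation of the channel input from (u, s) and the time-sharing sequence are omitted.

```python
import numpy as np

def joint_type(u, s):
    """Empirical joint distribution (2x2, rows u, columns s) of two binary sequences."""
    t = np.zeros((2, 2))
    np.add.at(t, (u, s), 1.0)
    return t / len(u)

def build_codebook(n, num_bins, per_bin, p_u, rng):
    """codebook[j, k] is the k-th length-n sequence of bin j, drawn i.i.d. Bernoulli(p_u)."""
    return (rng.random((num_bins, per_bin, n)) < p_u).astype(int)

def encode(codebook, w, s, p_us, eps):
    """Search bin w for a sequence whose joint type with s is within eps of p_us.
    Returns the within-bin index, or None (the analogue of encoding failure)."""
    for k, u in enumerate(codebook[w]):
        if np.max(np.abs(joint_type(u, s) - p_us)) <= eps:
            return k
    return None

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n, num_bins, per_bin = 20, 4, 256
    p_us = np.array([[0.4, 0.1], [0.1, 0.4]])  # hypothetical p(u, s), rows u, columns s
    cb = build_codebook(n, num_bins, per_bin, p_u=0.5, rng=rng)
    s = (rng.random(n) < 0.5).astype(int)      # toy state, i.i.d. Bernoulli(1/2)
    print(encode(cb, w=2, s=s, p_us=p_us, eps=0.1))  # an index into bin 2, or None
```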

Decoding

The decoder, upon receiving the sequence  , chooses a pair  ,  ,  , and  such that  . If such a pair exists and is unique, the decoder declares that  . Otherwise, the decoder declares an error.

b. Analysis of Probability of Error

The average probability of error is given by

The first term,  , and the second term,  , in the right-hand side expression of (A.2) go to zero as  by the strong asymptotic equipartition property (AEP) [31].

Without loss of generality, we can assume that  is sent, the time-sharing sequence is  , and the state realization is  . The probability of error is given by the conditional probability of error given  ,  , and  .

(i) Let  be the event that there is no sequence  such that  . For any  and  generated independently according to  and  , respectively, the probability that there exists at least one  such that  is greater than  for  sufficiently large. There are  such  's in each bin. The probability of event  , that is, the probability that there is no  for a given  in a particular bin, is therefore bounded by

Taking the natural logarithm on both sides of (A.3), we obtain

where  follows from the inequality  . From (A.4),  as  .

Under the event  , we can also assume that a particular sequence  in bin 1 is jointly strongly typical with  . Thus, codewords  corresponding to the pair  and  corresponding to  are sent from the informed and the uninformed encoders, respectively.

(ii) Let  be the event that

The Markov lemma [31] ensures joint strong typicality of  with high probability if  is jointly strongly typical and  is jointly strongly typical. We can conclude that  as  .

(iii) Let  be the event that

The probability that  for (  and  ), or (  and  ), is less than  for sufficiently large  . There are approximately  (exactly  ) such  sequences in the codebook. Thus, the conditional probability of event  given  and  is upper bounded by

From (A.7),  as  if  and  .

(iv) Let  be the event that

for  . The probability that  for  is less than  for sufficiently large  . There are approximately  such  sequences in the codebook. Thus, the conditional probability of event  given  and  is upper bounded by

From (A.9),  as  if  .

(v) Finally, let  be the event that

for (  and  ), or (  and  )), and  . The probability that  for  ,  , and  is less than  for sufficiently large  . There are approximately  sequences  and  sequences  in the codebook. Thus, the conditional probability of event  given  and  is upper bounded by

From (A.11),  as  if  .
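To see numerically why a covering bound like the one for event (i) vanishes, the Python sketch below assumes, for illustration only, that a bin holds 2^{n R_bin} independent candidates, each jointly typical with the state with probability at least 2^{-n(I + delta)}. The miss probability is then at most (1 - 2^{-n(I + delta)})^{2^{n R_bin}} <= exp(-2^{n(R_bin - I - delta)}), using ln(1 - x) <= -x. The parameter names are hypothetical and the sketch does not reproduce the exact exponents in (A.3) and (A.4). Logarithms are taken with math.log1p to avoid underflow.

```python
import math

def log_miss_probability(n, R_bin, I, delta):
    """Natural log of (1 - 2^{-n(I+delta)})^(2^{n*R_bin}): the chance that none of
    2^{n*R_bin} independent candidates is jointly typical with s (illustrative)."""
    p_hit = 2.0 ** (-n * (I + delta))
    num_candidates = 2.0 ** (n * R_bin)
    return num_candidates * math.log1p(-p_hit)

def log_upper_bound(n, R_bin, I, delta):
    """Natural log of exp(-2^{n(R_bin - I - delta)}), obtained from ln(1 - x) <= -x."""
    return -(2.0 ** (n * (R_bin - I - delta)))

if __name__ == "__main__":
    for n in (10, 50, 100):
        exact = log_miss_probability(n, R_bin=0.6, I=0.5, delta=0.02)
        bound = log_upper_bound(n, R_bin=0.6, I=0.5, delta=0.02)
        print(n, exact, bound)  # exact <= bound; both head to -infinity as n grows
```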

In terms of these events,  in (A.2) can be upper bounded, via the union bound and the fact that probabilities are at most one, as

From (A.12), it can easily be seen that  as  . Therefore, by (A.2), the probability of error  goes to zero as  , which completes the proof.
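The union bound in (A.12) adds the event probabilities, so the total vanishes only if every rate condition holds at once. The Python sketch below makes this concrete with generic packing terms of the form 2^{n(rate - threshold + 3*eps)}. The thresholds thr1, thr2, and thr_sum are hypothetical stand-ins for the individual-rate and sum-rate mutual-information terms in (A.7), (A.9), and (A.11), which are not reproduced here.

```python
def packing_term(n, rate, threshold, eps):
    """Generic union-bound term 2^{n(rate - threshold + 3*eps)}, rates in bits."""
    return 2.0 ** (n * (rate - threshold + 3 * eps))

def error_bound(n, R1, R2, thr1, thr2, thr_sum, eps):
    """Sum of three packing-type terms; thr1, thr2, thr_sum are hypothetical stand-ins
    for the individual-rate and sum-rate mutual-information terms."""
    return (packing_term(n, R1, thr1, eps)
            + packing_term(n, R2, thr2, eps)
            + packing_term(n, R1 + R2, thr_sum, eps))

if __name__ == "__main__":
    params = dict(thr1=0.6, thr2=0.5, thr_sum=0.9, eps=0.01)
    for R1, R2 in [(0.4, 0.4), (0.5, 0.45)]:
        print((R1, R2), [error_bound(n, R1, R2, **params) for n in (100, 1000)])
    # (0.4, 0.4): every exponent is negative, so the bound vanishes.
    # (0.5, 0.45): the sum-rate exponent is positive, so the bound blows up.
```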

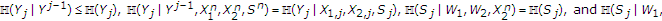

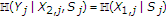

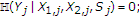

A.2. Converse for the Capacity Region in Corollary 2

In this section, we show that  satisfies (14) for any given sequence of binary codes  for the noiseless binary state-dependent MAC with  and one informed encoder satisfying  .

Let us first bound the rate of the uninformed encoder as follows:

where

-

(a)

follows from Fano's inequality and  as  ;

-

(b)

follows from the fact that  and  are deterministic functions of  and  , respectively, the memoryless property of the channel, and

-

(c)

follows from the fact that  is a Bernoulli random variable satisfying  ;

-

(d)

follows from the fact that the binary entropy function is a concave function;

-

(e)

follows from the fact that the binary entropy function is monotonically increasing on the interval between  and  , and  .
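Steps (d) and (e) rely on two properties of the binary entropy function h(p) = -p log2 p - (1 - p) log2(1 - p): concavity and monotone increase on [0, 1/2]. The Python sketch below checks both numerically on an arbitrary grid (midpoint Jensen inequality for concavity). It is a sanity check of these standard facts, not a proof, and the grid spacing is an assumption.

```python
import math

def h(p):
    """Binary entropy in bits, with h(0) = h(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

grid = [i / 1000 for i in range(1001)]

# Strict increase on [0, 1/2]: compare consecutive grid points up to 0.5.
increasing = all(h(a) < h(b) for a, b in zip(grid[:500], grid[1:501]))

# Concavity via midpoint Jensen: h((a + b)/2) >= (h(a) + h(b))/2.
concave = all(h((a + b) / 2) >= (h(a) + h(b)) / 2 - 1e-12
              for a in grid[::50] for b in grid[::50])

print(increasing, concave, h(0.5))  # True True 1.0
```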

An upper bound on  can be obtained as follows:

where

-

(a)

follows from Fano's inequality and  as  ;

-

(b)

follows from the fact that  and  are deterministic functions of  and  , respectively, and the memoryless property of the channel;

-

(c)

follows from the fact that

-

(d)

follows from the fact that

-

(e)

follows from the fact that  and

-

(f)

follows from the fact that the  is a Bernoulli random variable with  ; and  is correlated with  with  and  satisfying

(A.15)

-

(g)

follows from the fact that the term  is maximized under the constraint  for values  and  , and the maximum value of the term  is  ;

-

(h)

follows from the concavity property of the binary entropy function;

-

(i)

follows from the fact that the binary entropy function is monotonically increasing on the interval between  and  , and  .

From (A.13) and (A.14), we can conclude that the rate pair  satisfies (14) by letting  go to  .

We denote the set of jointly strongly typical sequences [31, 34] with distribution  as  . We define  as follows:
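For readers who want to experiment, the Python sketch below implements one common convention for joint strong typicality, similar to the one in Cover and Thomas's textbook: every symbol pair with positive probability must occur with empirical frequency within eps/(|X||Y|) of its probability, and pairs of probability zero must not occur. The constants used in [31, 34] may differ, and the function name and the dictionary representation of the pmf are illustrative choices.

```python
from collections import Counter

def jointly_strongly_typical(x, y, p_xy, eps):
    """Check joint strong typicality of sequences x, y w.r.t. pmf p_xy,
    given as a dict keyed by symbol pairs (a, b)."""
    n = len(x)
    assert n == len(y) and n > 0
    alphabet_x = {a for a, _ in p_xy}
    alphabet_y = {b for _, b in p_xy}
    tol = eps / (len(alphabet_x) * len(alphabet_y))
    counts = Counter(zip(x, y))
    # Pairs with zero (or unlisted) probability must not occur at all.
    for pair in counts:
        if p_xy.get(pair, 0.0) == 0.0:
            return False
    # Every positive-probability pair must appear with nearly the right frequency.
    return all(abs(counts.get(pair, 0) / n - p) < tol
               for pair, p in p_xy.items() if p > 0)

if __name__ == "__main__":
    p = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
    x = [0, 0, 0, 0, 1, 1, 1, 1, 1, 0]
    y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
    print(jointly_strongly_typical(x, y, p, eps=0.1))  # True: joint type equals p
```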

References

Chen B: Design and analysis of digital watermarking, information embedding, and data hiding systems, Ph.D. thesis. Massachusetts Institute of Technology, Cambridge, Mass, USA; 2000.

Chen B, Wornell GW: Quantization index modulation: a class of provably good methods for digital watermarking and information embedding. IEEE Transactions on Information Theory 2001, 47(4):1423-1443. 10.1109/18.923725

Moulin P, O'Sullivan JA: Information-theoretic analysis of information hiding. IEEE Transactions on Information Theory 2003, 49(3):563-593. 10.1109/TIT.2002.808134

Cohen AS, Lapidoth A: The Gaussian watermarking game. IEEE Transactions on Information Theory 2002, 48(6):1639-1667. 10.1109/TIT.2002.1003844

Swanson MD, Kobayashi M, Tewfik AH: Multimedia data-embedding and watermarking technologies. Proceedings of the IEEE International Conference on Communications (ICC '98), June 1998, Toronto, Canada 2: 823-827.

Caire G, Shamai S: On achievable throughput of a multi-antenna Gaussian broadcast channel. IEEE Transactions on Information Theory 2003, 49(7):1691-1706. 10.1109/TIT.2003.813523

Kalker T, Willems FMJ: Capacity bounds and constructions for reversible data-hiding. Proceedings of the 14th International Digital Signal Processing (DSP '03), January 2003, Santa Clara, Calif, USA 71-76.

Mitola J: Cognitive radio: an integrated agent architecture for software defined radio, Ph.D. dissertation. Royal Institute of Technology, Stockholm, Sweden; 2000.

Devroye N, Mitran P, Tarokh V: Achievable rates in cognitive radio channels. IEEE Transactions on Information Theory 2006, 52(5):1813-1827.

Jovicic A, Viswanath P: Cognitive radio: an information-theoretic perspective. submitted to IEEE Transactions on Information Theory

Shannon CE: Channels with side information at the transmitter. IBM Journal of Research and Development 1958, 2(4):289-293.

Salehi M: Capacity and coding for memories with real-time noisy defect information at encoder and decoder. IEE Proceedings I: Communications, Speech and Vision 1992, 139(2):113-117. 10.1049/ip-i-2.1992.0016

Caire G, Shamai S: On the capacity of some channels with channel state information. IEEE Transactions on Information Theory 1999, 45(6):2007-2019. 10.1109/18.782125

Kusnetsov AV, Tsybakov BS: Coding in a memory with defective cells. Problemy Peredachi Informatsii 1974, 10(2):52-60.

Heegard C, El Gamal A: On the capacities of computer memories with defects. IEEE Transactions on Information Theory 1983, 29(5):731-739. 10.1109/TIT.1983.1056723

Gel'fand SI, Pinsker MS: Coding for channel with random parameters. Problems of Control and Information Theory 1980, 9(1):19-31.

Cover TM, Chiang M: Duality between channel capacity and rate distortion with two-sided state information. IEEE Transactions on Information Theory 2002, 48(6):1629-1638. 10.1109/TIT.2002.1003843

Costa MHM: Writing on dirty paper. IEEE Transactions on Information Theory 1983, 29(3):439-441. 10.1109/TIT.1983.1056659

Gel'fand SI, Pinsker MS: On Gaussian channels with random parameters. Proceedings of the IEEE International Symposium on Information Theory (ISIT '83), September 1984, St. Jovite, Canada 247-250.

Kim Y-H, Sutivong A, Sigurjónsson S: Multiple user writing on dirty paper. Proceedings of the IEEE International Symposium on Information Theory (ISIT '04), June-July 2004, Chicago, Ill, USA 534.

Somekh-Baruch A, Shamai S, Verdú S: Cooperative encoding with asymmetric state information at the transmitters. Proceedings of the Allerton Conference on Communication, Control, and Computing, October 2006, Monticello, Ill, USA

Somekh-Baruch A, Shamai S, Verdú S: Cooperative multiple access encoding with states available at one transmitter. Proceedings of the IEEE International Symposium on Information Theory (ISIT '07), June 2007, Nice, France

Kotagiri S, Laneman JN: Multiaccess channels with state known to one encoder: a case of degraded message sets. Proceedings of the IEEE International Symposium on Information Theory (ISIT '07), June 2007, Nice, France

Somekh-Baruch A, Shamai S, Verdú S: Cooperative multiple access encoding with states available at one transmitter. submitted to IEEE Transactions on Information Theory

Philosof T, Khisti A, Erez U, Zamir R: Lattice strategies for the dirty multiple access channel. Proceedings of the IEEE International Symposium on Information Theory (ISIT '07), June 2007, Nice, France 386-390.

Cemal Y, Steinberg Y: The multiple-access channel with partial state information at the encoders. IEEE Transactions on Information Theory 2005, 51(11):3992-4003. 10.1109/TIT.2005.856981

Steinberg Y, Shamai S: Achievable rates for the broadcast channel with states known at the transmitter. Proceedings of the IEEE International Symposium on Information Theory (ISIT '05), September 2005, Adelaide, Australia 2184-2188.

Steinberg Y: Coding for the degraded broadcast channel with random parameters, with causal and noncausal side information. IEEE Transactions on Information Theory 2005, 51(8):2867-2877. 10.1109/TIT.2005.851727

Khisti A, Erez U, Wornell G: Writing on many pieces of dirty paper at once: the binary case. Proceedings of the IEEE International Symposium on Information Theory (ISIT '04), June-July 2004, Chicago, Ill, USA 535.

Kotagiri SP: State-dependent networks with side information and partial state recovery, Ph.D. dissertation. University of Notre Dame, Notre Dame, Ind, USA; 2007.

Cover TM, Thomas JA: Elements of Information Theory. John Wiley & Sons, New York, NY, USA; 1991.

Zamir R, Shamai S, Erez U: Nested linear/lattice codes for structured multiterminal binning. IEEE Transactions on Information Theory 2002, 48(6):1250-1276. 10.1109/TIT.2002.1003821

Gallager RG: Information Theory and Reliable Communication. John Wiley & Sons, New York, NY, USA; 1968.

Csiszár I, Körner J (Eds): Information Theory: Coding Theorems for Discrete Memoryless Systems. Academic Press, New York, NY, USA; 1981.

Acknowledgments

Part of this work was published in the Allerton Conference on Communication, Control, and Computing, Monticello, Ill, USA, October 2004. This work has been supported in part by NSF grants CCF05-46618 and CNS06-26595, the Indiana 21st Century Fund, and a Graduate Fellowship from the Center for Applied Mathematics at the University of Notre Dame.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Kotagiri, S.P., Laneman, J.N. Multiaccess Channels with State Known to Some Encoders and Independent Messages. J Wireless Com Network 2008, 450680 (2008). https://doi.org/10.1155/2008/450680


DOI: https://doi.org/10.1155/2008/450680

in Theorem 1 is convex due to the auxiliary time-sharing random variable  .
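The time-sharing variable convexifies the region because the rates of the different time-sharing modes are averaged: if mode $Q = q$ is used a fraction $P(Q = q)$ of the block, the overall rate pair is $\sum_q P(Q=q)\,(R_1(q), R_2(q))$. A minimal sketch of that averaging; the helper name `time_share` is hypothetical.

```python
from typing import Sequence, Tuple

RatePair = Tuple[float, float]


def time_share(weights: Sequence[float], pairs: Sequence[RatePair]) -> RatePair:
    """Average the rate pairs of the time-sharing modes with weights P(Q = q)."""
    if len(weights) != len(pairs):
        raise ValueError("one weight per rate pair is required")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must form a probability distribution")
    r1 = sum(w * p[0] for w, p in zip(weights, pairs))
    r2 = sum(w * p[1] for w, p in zip(weights, pairs))
    return (r1, r2)


if __name__ == "__main__":
    # Splitting the block equally between (1, 0) and (0, 1) yields the midpoint.
    print(time_share([0.5, 0.5], [(1.0, 0.0), (0.0, 1.0)]))  # (0.5, 0.5)
```

Sweeping the weights over all distributions traces the convex hull of the mode rate pairs, which is why the union over $Q$ needs no additional convex-closure step.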

of Theorem 1 can also be obtained by time-sharing between two successive decoding schemes, that is, decoding one encoder's message first and then using the decoded codeword together with the channel output to decode the other encoder's message. On the one hand, consider first decoding the message of the informed encoder. Following [ ], we can decode the codeword  of the informed encoder with arbitrarily low probability of error. We then use  along with  to decode  . Under these conditions, if  , the message of the uninformed encoder can also be decoded with arbitrarily low probability of error. On the other hand, if we reverse the decoding order of the two messages, the constraints become  and  . By time-sharing between these two successive decoding schemes and taking the convex closure, we obtain the inner bound  of Theorem 1.
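The mechanics of successive decoding plus time-sharing can be seen on a textbook example. As an illustrative stand-in only, the sketch uses a two-user Gaussian MAC $Y = X_1 + X_2 + Z$ without state, with powers $P_1, P_2$ and noise variance $N$; this is not the state-dependent channel of Theorem 1. Each decoding order gives one corner point, both corners have the same sum rate, and time-sharing between the orders traces the segment joining them. All function names are hypothetical.

```python
import math
from typing import Tuple

RatePair = Tuple[float, float]


def c(snr: float) -> float:
    """Gaussian capacity function C(x) = 0.5 * log2(1 + x), bits per channel use."""
    return 0.5 * math.log2(1.0 + snr)


def corner_points(p1: float, p2: float, n: float) -> Tuple[RatePair, RatePair]:
    """Rate pairs achieved by the two successive decoding orders."""
    # Decode user 1 first, treating user 2 as noise; then user 2 sees only Z.
    user1_first = (c(p1 / (p2 + n)), c(p2 / n))
    # Decode user 2 first, treating user 1 as noise; then user 1 sees only Z.
    user2_first = (c(p1 / n), c(p2 / (p1 + n)))
    return user1_first, user2_first


def time_share_orders(lam: float, p1: float, p2: float, n: float) -> RatePair:
    """Use the 'user 1 first' order a fraction lam of the time."""
    a, b = corner_points(p1, p2, n)
    return (lam * a[0] + (1 - lam) * b[0], lam * a[1] + (1 - lam) * b[1])


if __name__ == "__main__":
    print(corner_points(1.0, 1.0, 1.0))
    print(time_share_orders(0.5, 1.0, 1.0, 1.0))
```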


though the channel has maximum entropy state. Let us investigate how the uninformed encoder can benefit from the informed encoder's actions even in this case, using successive decoding in which  is decoded first using  and then  is decoded using  and  . The informed encoder applies the standard binary DPC, that is,  and  in generalized binary DPC, to generate its codewords, and the uninformed encoder uses a Bernoulli  random variable to generate its codewords, where  . In the case of maximum entropy state,  can be decoded first with arbitrarily low probability of error if  satisfies  and  . The channel output can be written as  because  for  . Using  , we can generate a new channel output for decoding  as  . Since there is no binary noise present in  for decoding  , the message of the uninformed encoder can be decoded with arbitrarily low probability of error if  for  satisfying both  and  .
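Rates of this kind can be evaluated by brute-force enumeration over a small joint distribution. Since the original symbols were lost in extraction, the sketch below assumes a noiseless binary model $Y = X_1 \oplus X_2 \oplus S$ with $S \sim \mathrm{Bernoulli}(1/2)$, a DPC-style auxiliary $U = X_1 \oplus S$ with $X_1 \sim \mathrm{Bernoulli}(p_1)$ independent of $S$, and $X_2 \sim \mathrm{Bernoulli}(p_2)$ at the uninformed encoder. It computes the Gel'fand-Pinsker-type term $I(U;Y) - I(U;S)$ for decoding $U$ first, then $I(X_2; Y, U)$ for decoding $X_2$, and checks that $Y \oplus U = X_2$ under this model. It illustrates the mechanics only and is not the paper's derivation.

```python
import math
from collections import defaultdict
from itertools import product
from operator import itemgetter
from typing import Callable, Dict, Hashable, Tuple

Outcome = Tuple[int, int, int, int, int]  # (s, x1, x2, u, y)

# Coordinate selectors for the assumed outcome layout.
S, X2, U, Y = itemgetter(0), itemgetter(2), itemgetter(3), itemgetter(4)
YU = itemgetter(4, 3)  # the pair (y, u) available when decoding X2 second


def entropy(dist: Dict[Hashable, float]) -> float:
    """Shannon entropy in bits of a {value: probability} table."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0.0)


def marginal(joint: Dict[Outcome, float],
             key: Callable[[Outcome], Hashable]) -> Dict[Hashable, float]:
    """Distribution of key(outcome) under the joint table."""
    out: Dict[Hashable, float] = defaultdict(float)
    for outcome, p in joint.items():
        out[key(outcome)] += p
    return out


def mutual_info(joint: Dict[Outcome, float], key_a: Callable, key_b: Callable) -> float:
    """I(A;B) = H(A) + H(B) - H(A,B)."""
    return (entropy(marginal(joint, key_a)) + entropy(marginal(joint, key_b))
            - entropy(marginal(joint, lambda o: (key_a(o), key_b(o)))))


def binary_model(p1: float, p2: float) -> Dict[Outcome, float]:
    """Joint pmf of (S, X1, X2, U, Y) under the assumed model."""
    joint: Dict[Outcome, float] = {}
    for s, x1, x2 in product((0, 1), repeat=3):
        prob = 0.5 * (p1 if x1 else 1 - p1) * (p2 if x2 else 1 - p2)
        u = x1 ^ s         # assumed DPC auxiliary of the informed encoder
        y = x1 ^ x2 ^ s    # assumed noiseless binary MAC with additive state
        joint[(s, x1, x2, u, y)] = prob
    return joint


def rates_u_first(p1: float, p2: float) -> Tuple[float, float]:
    """(R1, R2) when U is decoded from Y first, then X2 from (Y, U).

    A negative R1 means U cannot be decoded first under these assumptions.
    """
    joint = binary_model(p1, p2)
    r1 = mutual_info(joint, U, Y) - mutual_info(joint, U, S)
    r2 = mutual_info(joint, X2, YU)
    return r1, r2


def residual_is_x2(p1: float, p2: float) -> bool:
    """Check that Y xor U equals X2 on every outcome with positive probability."""
    return all((y ^ u) == x2
               for (_s, _x1, x2, u, y), p in binary_model(p1, p2).items() if p > 0)


if __name__ == "__main__":
    print(rates_u_first(0.3, 0.1), residual_is_x2(0.3, 0.1))
```

Under these assumptions the enumeration reproduces the closed forms $R_1 = h(p_1) - h(p_2)$ and $R_2 = h(p_2)$, where $h$ is the binary entropy function.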

can be written as  , we can achieve  as if there were no state in the channel, even though the maximum entropy binary state is present in the channel and is not known to the uninformed encoder. If  , the uninformed encoder can still achieve positive rates, that is,  .

using successive decoding in the reverse order, that is,  is decoded first using  and then  is decoded using  and  . If  ,  can be decoded with arbitrarily low probability of error if  . This means that only  is achievable. Then  is achievable with  and  .
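The reverse decoding order can be evaluated the same way. The sketch again assumes (the symbols are ours, since the originals were lost) $Y = X_1 \oplus X_2 \oplus S$ with $S \sim \mathrm{Bernoulli}(1/2)$, $U = X_1 \oplus S$ with $X_1 \sim \mathrm{Bernoulli}(p_1)$ independent of $S$, and $X_2 \sim \mathrm{Bernoulli}(p_2)$. In this model $U$ is uniform and independent of $X_2$, so $Y$ is independent of $X_2$ and $I(X_2;Y) = 0$, while $I(U;Y,X_2) - I(U;S) = h(p_1)$. Whether this matches the paper's exact statement cannot be confirmed from the surviving text.

```python
import math
from collections import defaultdict
from itertools import product
from typing import Dict, Sequence, Tuple

Outcome = Tuple[int, int, int, int, int]
# Positions of each variable in an outcome tuple (assumed model).
S, X1, X2, U, Y = range(5)


def joint_pmf(p1: float, p2: float) -> Dict[Outcome, float]:
    """Joint pmf of (S, X1, X2, U, Y) with U = X1 xor S and Y = X1 xor X2 xor S."""
    joint: Dict[Outcome, float] = {}
    for s, x1, x2 in product((0, 1), repeat=3):
        prob = 0.5 * (p1 if x1 else 1 - p1) * (p2 if x2 else 1 - p2)
        joint[(s, x1, x2, x1 ^ s, x1 ^ x2 ^ s)] = prob
    return joint


def entropy_of(joint: Dict[Outcome, float], idx: Sequence[int]) -> float:
    """Entropy in bits of the outcome coordinates listed in idx."""
    marg: Dict[Tuple[int, ...], float] = defaultdict(float)
    for outcome, p in joint.items():
        marg[tuple(outcome[i] for i in idx)] += p
    return -sum(p * math.log2(p) for p in marg.values() if p > 0.0)


def mutual_info(joint: Dict[Outcome, float], a: Tuple[int, ...], b: Tuple[int, ...]) -> float:
    """I(A;B) for coordinate tuples a and b."""
    return entropy_of(joint, a) + entropy_of(joint, b) - entropy_of(joint, a + b)


def rates_x2_first(p1: float, p2: float) -> Tuple[float, float]:
    """(R1, R2) when X2 is decoded from Y first, then U from (Y, X2)."""
    joint = joint_pmf(p1, p2)
    r2 = mutual_info(joint, (X2,), (Y,))
    r1 = mutual_info(joint, (U,), (Y, X2)) - mutual_info(joint, (U,), (S,))
    return r1, r2


if __name__ == "__main__":
    print(rates_x2_first(0.3, 0.1))
```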

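The partial-state-cancellation reading of GDPC in the paragraph below rests on a standard linear MMSE decomposition. As a sketch, assume zero-mean jointly Gaussian $X$ and $S$ with $\mathrm{Var}(X) = P$, $\mathrm{Var}(S) = Q$, and correlation coefficient $\rho$ (these labels are ours, since the original symbols were lost). The LMMSE estimate of $X$ from $S$ is $aS$ with $a = \rho\sqrt{P/Q}$, so $X = aS + X'$, where $X'$ has variance $(1-\rho^2)P$ and is uncorrelated with $S$. For $\rho < 0$ we have $a < 0$: the component $aS$ cancels part of the state using power $a^2 Q = \rho^2 P$ and leaves the state $(1+a)S$. The helper names are hypothetical.

```python
import math
from typing import NamedTuple


class LmmseSplit(NamedTuple):
    a: float             # LMMSE coefficient of X on S: Cov(X, S) / Var(S)
    cancel_power: float  # power of the a*S component, a^2 * Q
    residual_var: float  # Var(X - a*S)
    residual_cov: float  # Cov(X - a*S, S); zero by the orthogonality principle
    state_gain: float    # S + a*S = (1 + a) * S is the state left over


def lmmse_split(P: float, Q: float, rho: float) -> LmmseSplit:
    """Decompose X = a*S + X' for zero-mean X, S with Var(X)=P, Var(S)=Q, corr rho."""
    if P <= 0 or Q <= 0 or not -1.0 <= rho <= 1.0:
        raise ValueError("need P > 0, Q > 0 and -1 <= rho <= 1")
    cov_xs = rho * math.sqrt(P * Q)
    a = cov_xs / Q
    residual_var = P - 2 * a * cov_xs + a * a * Q  # Var(X) - 2a Cov(X,S) + a^2 Var(S)
    residual_cov = cov_xs - a * Q                  # Cov(X,S) - a Var(S)
    return LmmseSplit(a, a * a * Q, residual_var, residual_cov, 1.0 + a)


if __name__ == "__main__":
    # rho < 0: part of the state is cancelled, the rest is left for standard DPC.
    print(lmmse_split(P=1.0, Q=4.0, rho=-0.5))
```

The two power terms add back to $P$, i.e. $\rho^2 P + (1-\rho^2)P = P$, which is why GDPC can be read as spending part of the power on cancellation and the rest on standard DPC.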

In GDPC,  and  are correlated with correlation coefficient  , whereas in standard DPC they are uncorrelated. If the channel input  is negatively correlated with the channel state  , then GDPC can be viewed as partial state cancellation followed by standard DPC. To see this, assume that  is negative and denote by  the linear estimate of  from  under the minimum mean square error (MMSE) criterion. Accordingly,  . We can rewrite the auxiliary random variable  as follows:  ,  can be viewed as the remaining state after state cancellation using power  , and  is the estimation error, which has variance  and is uncorrelated with  . GDPC with negative correlation coefficient  can be interpreted as standard DPC with power